The recent movie “A Star Is Born” opens to a deafening concert scene, with veteran rocker Jack at center stage amidst cranked guitars and the crowd’s screams.

A disconcerting high-pitched sound sneaks in to hint at his impaired hearing. Later in the movie, his sound engineer offers Jack a set of in-ear monitors to protect what’s left of his hearing by controlling his exposure to the din and adding some of the missing highs to compensate for his loss. He refuses, afraid that the devices would lessen his connection with the audience and the music.

While researching this article, I’ve encountered taboos about even acknowledging the existence of hearing loss, especially among sound engineers. The ability to hear well and accurately is an important part of the skill set: the expectation of “golden ears.” Yet some degree of hearing impairment is a fact of life as we age, are exposed to a lifetime of sometimes high-level sound, inherit predispositions via our genes, and experience physical or sonic traumas and diseases.

Though the statistics vary, estimates of significant hearing loss range from 10 to 15 percent of the U.S. population, and among those over 60, diminished hearing is much more common. The higher frequencies are typically the first to go, and the damaged hair cells within the cochlea, which sense the different frequencies and signal them to the brain, do not grow back.

As audio professionals and musicians, at least some of us will need to seek practical solutions so we can continue doing what we love, using the skills we’ve spent a lifetime developing.

Here we’ll look at ways to protect the hearing capacity we still have, devices to improve our ability to hear speech and music, and tools that can help with making audio decisions, from tuning a guitar to tuning a sound system. We’ll also explore the significant differences between understanding speech and listening to music, and why and how devices such as hearing aids must be adjusted to function more accurately in a musical setting.

Speech Vs Music

The first difference between speech and music is one of sound pressure level (SPL). A normal conversational voice at a distance of 3 feet is about 60 dB, and a shout may reach 80 dB or so.

Dr. Marshall Chasin, a Toronto-based audiologist, measured unamplified musical instruments at a distance of 3 meters (published in “Amplified Music Through Hearing Aids” by Chasin and Hockley). In that study, for example, the output of a cello ranges from 80 to 104 dBA and a flute from 92 to 105 dBA. This is before multiple instruments are run through a sound system, or amplified instruments such as electric guitars and keyboards are added.
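To put those numbers in perspective, a level difference of ΔL decibels corresponds to a sound pressure ratio of 10^(ΔL/20) and an intensity (power) ratio of 10^(ΔL/10). The short Python sketch below compares the top of the cello range cited above (104 dBA at 3 meters) with a 60 dB conversational voice at 3 feet. The two figures were taken at different distances and not necessarily with the same weighting, so treat the result as a rough illustration of scale, not a precise comparison.

```python
def pressure_ratio(delta_db: float) -> float:
    """Sound pressure ratio corresponding to a level difference in dB."""
    return 10 ** (delta_db / 20)


def intensity_ratio(delta_db: float) -> float:
    """Acoustic intensity (power) ratio corresponding to a level difference in dB."""
    return 10 ** (delta_db / 10)


conversation_db = 60.0   # normal voice at about 3 feet
cello_peak_dba = 104.0   # upper end of the cello range, measured at 3 meters

delta = cello_peak_dba - conversation_db  # 44 dB
print(f"Level difference: {delta:.0f} dB")
print(f"Pressure ratio:   {pressure_ratio(delta):.0f}x")
print(f"Intensity ratio:  {intensity_ratio(delta):,.0f}x")
```

The 44 dB gap works out to roughly 158 times the sound pressure and about 25,000 times the acoustic intensity of ordinary conversation, from a single acoustic instrument.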

With speech, most of the acoustic energy is in the low and low-mid frequency range, peaking in the 500 Hz region and rolling off above that at 5 to 6 dB per octave, so the higher-frequency consonants are considerably quieter.

The physical mechanism of the human voice dictates this predictable pattern, no matter the language spoken. Musical instruments generate a variety of sound envelopes, from the sustained tones of a wind or bowed string instrument to the rapid impacts of percussion, and often have a higher proportion of mid- and high-frequency energy when compared with the voice.
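As a rough illustration of the speech roll-off, the sketch below estimates how far below the 500 Hz peak each octave band falls. It assumes a perfectly constant slope of 5.5 dB per octave (my choice of the midpoint of the 5 to 6 dB range); real speech spectra are not a straight line, so this is a simplified model, not measured data.

```python
import math

PEAK_HZ = 500.0          # approximate spectral peak of speech
SLOPE_DB_PER_OCT = 5.5   # assumed midpoint of the 5-6 dB/octave roll-off


def relative_level_db(freq_hz: float) -> float:
    """Level relative to the 500 Hz peak, assuming a constant roll-off above it."""
    if freq_hz <= PEAK_HZ:
        return 0.0  # this simple model does not describe the region below the peak
    octaves_above = math.log2(freq_hz / PEAK_HZ)
    return -SLOPE_DB_PER_OCT * octaves_above


for f in (500, 1000, 2000, 4000, 8000):
    print(f"{f:>5} Hz: {relative_level_db(f):6.1f} dB")
```

Under these assumptions, energy around 4 kHz, where many consonant cues live, sits about 16.5 dB below the 500 Hz region, and 8 kHz sits about 22 dB down.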

A third element is crest factor, the amount by which the signal’s peaks exceed its average (RMS) level. For speech it is around 12 dB and for music 16 to 18 dB, and in instantaneous measurements, music “may result in a 20-plus dB crest factor.” So all in all, music is much more dynamic, varied, and unpredictable than speech. Later we’ll explore these differences further as they relate to hearing aids and similar devices that address hearing losses.
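Expressed in decibels, crest factor is 20·log10(peak / RMS). The Python sketch below computes it for a list of samples. The two test signals are synthetic and purely illustrative: a steady sine wave comes out at about 3 dB, well under the 12 dB cited for speech, while a crude spiky signal standing in for percussive material lands near 30 dB.

```python
import math
from typing import Sequence


def crest_factor_db(samples: Sequence[float]) -> float:
    """Crest factor in dB: 20 * log10(peak / RMS)."""
    if not samples:
        raise ValueError("need at least one sample")
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0.0:
        raise ValueError("a silent signal has no defined crest factor")
    return 20 * math.log10(peak / rms)


fs = 48_000  # sample rate in Hz

# One second of a steady 1 kHz sine: crest factor of about 3.01 dB
sine = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs)]
print(f"sine:   {crest_factor_db(sine):.2f} dB")

# Mostly quiet signal with a sharp spike every 1,000 samples
# (a crude, hypothetical stand-in for percussive material)
clicks = [1.0 if n % 1000 == 0 else 0.01 for n in range(fs)]
print(f"clicks: {crest_factor_db(clicks):.2f} dB")
```

The higher the crest factor, the more headroom a device needs to pass the peaks cleanly while keeping the average level where it belongs.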

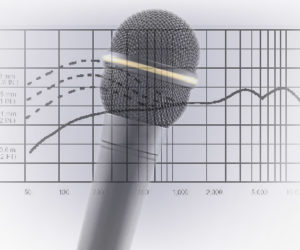

Perception Of Frequencies

Mick Conley, front-of-house engineer for Marty Stuart and the Fabulous Superlatives as well as for the current Sweethearts of the Rodeo tour, described a test he ran while on the road with the band.

“Most of my guys are over 50,” explains Conley, “so I ran a 10 kHz tone through the system to see what happened. The one crew guy who was only about 30 years old was going crazy with the sound, but the other guys didn’t even hear it.”

On a similar note, when I worked with Electro-Voice, we would bring high school science classes in to learn about audio. One experiment was to run a sine wave generator through a speaker system at different frequencies, starting high. It was very interesting to see how few students could detect anything above 15 kHz, and that their older teachers didn’t begin hearing a sound until 10 to 12 kHz or lower. This age-related loss in the highest frequency ranges, called presbycusis, is the most common hearing impairment.
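Tests like these need nothing more than a sine generator. As one possible approach, the sketch below uses Python’s standard-library `wave` module to write short 16-bit mono test tones to WAV files. The function name, file names, level, duration, and fade length are my own illustrative choices, not details of either test described above. Start playback at a low level and raise it cautiously.

```python
import math
import struct
import wave


def write_test_tone(path: str, freq_hz: float, seconds: float = 3.0,
                    sample_rate: int = 48_000, amplitude: float = 0.25) -> int:
    """Write a mono 16-bit sine test tone to a WAV file; return the frame count.

    Short linear fades at the start and end avoid the clicks an abrupt
    start or stop would produce. Clicks are broadband, so a listener who
    cannot hear the tone itself might still hear them and be misled.
    """
    n_frames = int(seconds * sample_rate)
    fade = min(int(0.05 * sample_rate), n_frames // 2)  # 50 ms ramps
    frames = bytearray()
    for n in range(n_frames):
        gain = 1.0
        if n < fade:
            gain = n / fade                       # fade in
        elif n >= n_frames - fade:
            gain = (n_frames - 1 - n) / fade      # fade out
        value = amplitude * gain * math.sin(2 * math.pi * freq_hz * n / sample_rate)
        frames += struct.pack("<h", int(round(value * 32767)))  # little-endian int16
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)          # 2 bytes = 16-bit samples
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(frames))
    return n_frames


# A descending series like the classroom demo, starting high
for f in (16_000, 15_000, 12_000, 10_000, 8_000):
    write_test_tone(f"tone_{f}hz.wav", f)
```

A 48 kHz sample rate comfortably covers tones up to 20 kHz; keep in mind that the playback system and loudspeaker must also reproduce those frequencies for the test to mean anything.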

Noise-induced hearing loss is the second most common condition and affects those exposed to continual high levels of sound from industrial sources, music, and so on. Even musicians in symphony orchestras often exhibit this loss. The typical pattern is a notch in hearing capability between 3 and 6 kHz, centered around 4 kHz.

The damage accumulates and worsens over months and years of exposure – resulting from the destruction of hair cells sensitive to this frequency range. Conley mentioned engineers who “have totally lost that area,” and thus overboost their mixes in that range and “rip your head off.”
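On an audiogram, which plots hearing thresholds in dB HL at standard test frequencies (higher numbers mean poorer hearing), the noise-induced notch shows up as thresholds that worsen around 3 to 6 kHz and then recover somewhat at 8 kHz, unlike the steadily sloping loss of presbycusis. The sketch below flags that shape using a deliberately simplified, hypothetical rule: the worst threshold between 3 and 6 kHz must be at least 10 dB poorer than both the worse of 1 and 2 kHz and the 8 kHz threshold. Clinical notch criteria differ and belong in an audiologist’s hands, and both sample audiograms are invented for illustration.

```python
from typing import Mapping


def has_noise_notch(thresholds_db_hl: Mapping[int, float],
                    min_depth_db: float = 10.0) -> bool:
    """Flag a 3-6 kHz notch using a simplified, hypothetical rule.

    thresholds_db_hl maps test frequency (Hz) to hearing threshold (dB HL);
    larger values mean poorer hearing. Requires 1, 2, 3, 4, 6 and 8 kHz.
    """
    notch = max(thresholds_db_hl[f] for f in (3000, 4000, 6000))  # worst in notch region
    lower = max(thresholds_db_hl[f] for f in (1000, 2000))        # worse of 1 and 2 kHz
    upper = thresholds_db_hl[8000]                                # recovery point
    return notch - lower >= min_depth_db and notch - upper >= min_depth_db


# Invented example audiograms (dB HL)
noise_pattern = {1000: 10, 2000: 15, 3000: 35, 4000: 45, 6000: 35, 8000: 20}
age_pattern = {1000: 10, 2000: 15, 3000: 25, 4000: 35, 6000: 45, 8000: 60}

print("noise-like:", has_noise_notch(noise_pattern))  # notch with 8 kHz recovery
print("age-like:  ", has_noise_notch(age_pattern))    # keeps sloping down, no recovery
```

The recovery at 8 kHz is what separates the two shapes here; an engineer with the first pattern would be the one tempted to overboost the 3 to 6 kHz region.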